NVIDIA A100 PCIe GPU 40GB and 80GB

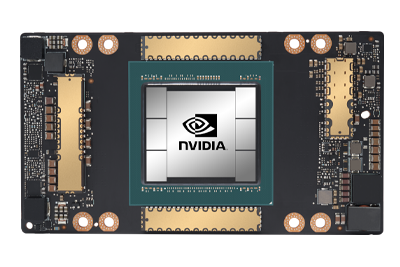

A100 SXM GPU

40GB

A100 SXM GPU

80GB

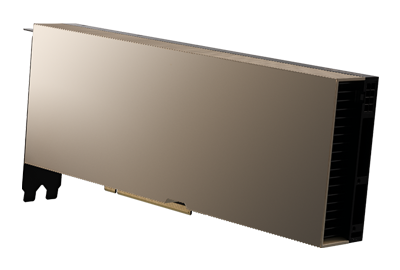

Featuring NVIDIA’s Ampere architecture with Tensor Cores, the NVIDIA A100 is currently NVIDIA’s most powerful GPU accelerator for deep learning, machine learning, high-performance computing, and data analytics. The GPU is available in two form factors: an SXM4 module, and the PCIe card described here, which uses a PCIe 4.0 connection offering twice the bandwidth of PCIe 3.0. Both the SXM and PCIe form factors are available with either 40GB or 80GB of memory. All variants outperform the previous-generation NVIDIA V100, which is based on the Volta architecture, with up to a 20x increase in performance. NVIDIA’s Multi-Instance GPU technology, or MIG, enables the GPU to be partitioned into up to seven isolated GPU instances, allowing data centers to dynamically adjust to shifting workload demands.
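The "twice the bandwidth" figure for PCIe 4.0 can be checked with a little arithmetic. The sketch below is illustrative only: it assumes a x16 link, uses the per-lane transfer rates and 128b/130b line encoding from the PCI Express specifications, and ignores protocol overhead. The function name `pcie_bandwidth_gb_s` is made up for this example.

```python
# Per-lane raw transfer rates in GT/s (PCI Express 3.0 and 4.0 specifications).
TRANSFER_RATE_GT_S = {"3.0": 8.0, "4.0": 16.0}

# Both generations use 128b/130b line encoding: 128 payload bits per 130 bits sent.
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gb_s(generation: str, lanes: int = 16) -> float:
    """Approximate bandwidth per direction in GB/s (x16 link assumed by default)."""
    raw_gbit_s = TRANSFER_RATE_GT_S[generation] * lanes
    return raw_gbit_s * ENCODING_EFFICIENCY / 8  # bits -> bytes


if __name__ == "__main__":
    gen3 = pcie_bandwidth_gb_s("3.0")
    gen4 = pcie_bandwidth_gb_s("4.0")
    print(f"PCIe 3.0 x16: {gen3:.2f} GB/s per direction")
    print(f"PCIe 4.0 x16: {gen4:.2f} GB/s per direction")
    print(f"Ratio: {gen4 / gen3:.1f}x")
```

Under these assumptions a x16 link moves roughly 15.75 GB/s per direction on PCIe 3.0 and roughly 31.5 GB/s on PCIe 4.0. Real-world throughput is somewhat lower once packet and protocol overhead are included.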

Featuring 40GB of HBM2 memory or 80GB of HBM2e memory, the NVIDIA A100 PCIe GPUs offer memory bandwidth of up to about 2 terabytes per second (TB/s) on the 80GB model to take on the largest data sets and simulations. Dynamic random-access memory (DRAM) utilization efficiency reaches up to 95%. Compared to the previous-generation Volta-based V100, the A100 delivers 1.7X higher memory bandwidth. For networks composed of billions of parameters, structural sparsity, a technique that sets some parameters to zero, can simplify the model without compromising accuracy. For sparse models, the Tensor Cores can deliver up to twice the performance, which is useful for AI inference and model training. NVLink is available to the PCIe GPUs via the third-generation NVLink Bridge, which connects up to two GPUs, essentially enabling them to function as a single unit with double the available resources. Delivering up to 312 teraFLOPS (TFLOPS) of deep learning performance, the NVIDIA A100 offers up to 20X the tensor floating-point operations per second for deep learning training, plus the same increase in tera operations per second (TOPS) for deep learning inference, compared to NVIDIA Volta-based GPUs. In summary, the A100 offers 6,912 FP32 CUDA cores, 3,456 FP64 CUDA cores, and 432 Tensor Cores, a significant increase over the 5,120 CUDA cores offered by the V100.
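To make the structural sparsity idea concrete, here is a minimal sketch of the 2:4 pattern that NVIDIA documents for Ampere Tensor Cores: in every group of four weights, two are set to zero. The helper name `prune_2_of_4` and the choice to keep the two largest-magnitude values are assumptions for this example. It is not NVIDIA's tooling, and a real workflow would also fine-tune the pruned model to recover accuracy.

```python
def prune_2_of_4(weights):
    """Zero the two smallest-magnitude values in each consecutive group of four.

    Illustrative only: the selection rule (keep the two largest by absolute
    value) is an assumption for this sketch.
    """
    if len(weights) % 4 != 0:
        raise ValueError("number of weights must be a multiple of 4")

    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(v if i in keep else 0.0 for i, v in enumerate(group))
    return pruned


if __name__ == "__main__":
    dense = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
    print(prune_2_of_4(dense))
    # prints [0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.4, 0.0]
```

Because exactly half of the values in each group are zero, the hardware can skip them in a predictable way, which is where the "up to twice the performance" figure for sparse models comes from.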

With a maximum thermal design power (TDP) of 250W for the 40GB PCIe GPU or 300W for the 80GB PCIe GPU, cooling is essential for performance. The PCIe NVIDIA A100 40GB and 80GB cards are passively cooled and are designed to be integrated into a server chassis whose airflow is engineered to provide maximum cooling. Effectively removing heat from the chassis enables the GPUs to perform at maximum efficiency.

The NVIDIA A100 PCIe GPU is not designed for gaming or specifically for use in workstations. It is designed for data center applications and is the direct successor to the NVIDIA Volta architecture-based V100. The die used for this GPU is larger than that of the V100 while offering much higher density thanks to a 7nm process, a change from the 12nm process used in the previous generation. These architectural improvements, new ways of processing the data, and significantly more memory all contribute to the performance of NVIDIA’s new flagship GPU.