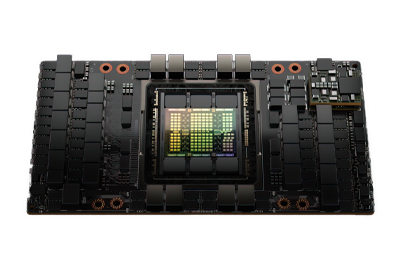

NVIDIA H100 SXM5 GPU

The NVIDIA H100 SXM5 GPU has 528 fourth-generation Tensor Cores that can deliver up to 989 teraFLOPS. On an NVLink board, 4 to 8 GPUs are interconnected through fourth-generation NVLink at up to 900GB/s per GPU, while each GPU connects to the host over a PCIe Gen5 interface. NVIDIA quotes up to a 30x inference speedup on large language models and up to 4x faster training compared with the previous-generation A100. The H100 features second-generation Multi-Instance GPU (MIG) technology, which can partition it into up to seven separate, isolated instances for multiple users. It has a base clock of 1590MHz and a boost clock of 1980MHz. NVIDIA AI Enterprise, available by subscription, provides an accelerated path to workflows such as AI chatbots, recommendation engines and vision AI, and its predefined workloads help administrators get up to speed quickly. The card has no external display outputs for connecting monitors.
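To put the NVLink figure in context, the short Python sketch below compares it with the theoretical bandwidth of a PCIe 5.0 x16 host link. It assumes PCIe 5.0 signals at 32 GT/s per lane with 128b/130b encoding, and it treats the 900GB/s NVLink number as total bidirectional bandwidth per GPU. Protocol overheads beyond line encoding are ignored, so real-world throughput is lower on both links.

```python
# Rough comparison of NVLink vs PCIe 5.0 x16 bandwidth for one H100 SXM5.
# Assumptions: PCIe 5.0 runs at 32 GT/s per lane with 128b/130b encoding;
# the 900 GB/s NVLink figure is total (both directions) per GPU.

PCIE5_TRANSFERS_PER_S = 32e9   # transfers per second, per lane
PCIE5_ENCODING = 128 / 130     # payload bits per transmitted bit
LANES = 16
NVLINK_TOTAL_GB_S = 900        # GB/s, both directions combined


def pcie_bandwidth_gb_s(lanes: int = LANES) -> float:
    """Theoretical one-direction PCIe 5.0 bandwidth in GB/s (decimal)."""
    bits_per_s = PCIE5_TRANSFERS_PER_S * lanes * PCIE5_ENCODING
    return bits_per_s / 8 / 1e9


per_direction = pcie_bandwidth_gb_s()
bidirectional = 2 * per_direction
ratio = NVLINK_TOTAL_GB_S / bidirectional

print(f"PCIe 5.0 x16: {per_direction:.1f} GB/s per direction, "
      f"{bidirectional:.1f} GB/s total")
print(f"NVLink vs PCIe 5.0 x16: ~{ratio:.1f}x")
```

Under these assumptions a PCIe 5.0 x16 link offers roughly 63GB/s per direction, so the 900GB/s NVLink figure is about seven times the host-link bandwidth.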

The GPU has 80GB of HBM3 memory on a 5120-bit memory interface running at 1313MHz. In the SXM form factor, the H100 sits on an NVLink board that connects 4 to 8 GPUs, and it delivers a memory bandwidth of 3.35TB/s, compared with 2TB/s for the PCIe-based card.
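The memory figures can be cross-checked with the usual relation: bandwidth = (bus width in bits / 8) × per-pin data rate. The sketch below back-calculates the per-pin data rate implied by the quoted 3.35TB/s over the 5120-bit interface and compares the SXM and PCIe bandwidth figures. It uses decimal units (1TB/s = 10^12 bytes/s), and the ratio to the 1313MHz clock is plain arithmetic on the quoted numbers, not a statement about how HBM3 signalling is specified.

```python
# Cross-check H100 SXM5 memory figures (decimal units: 1 TB/s = 1e12 B/s).
BUS_WIDTH_BITS = 5120
SXM_BANDWIDTH_TB_S = 3.35
PCIE_CARD_BANDWIDTH_TB_S = 2.0
MEMORY_CLOCK_MHZ = 1313


def per_pin_rate_gbps(bandwidth_tb_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gb/s) implied by a total bandwidth and bus width."""
    bits_per_s = bandwidth_tb_s * 1e12 * 8
    return bits_per_s / bus_width_bits / 1e9


rate = per_pin_rate_gbps(SXM_BANDWIDTH_TB_S, BUS_WIDTH_BITS)
# Data transfers per memory-clock cycle implied by the quoted figures.
transfers_per_clock = rate * 1e3 / MEMORY_CLOCK_MHZ
speedup = SXM_BANDWIDTH_TB_S / PCIE_CARD_BANDWIDTH_TB_S

print(f"Implied per-pin data rate: {rate:.2f} Gb/s")
print(f"Transfers per 1313MHz clock: ~{transfers_per_clock:.1f}")
print(f"SXM vs PCIe memory bandwidth: {speedup:.1f}x")
```

The result, about 5.2Gb/s per pin, works out to roughly four transfers per cycle of the quoted 1313MHz clock, and the SXM card has about 1.7 times the memory bandwidth of the PCIe card.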

In the SXM form factor, the module draws power through its connectors on the NVLink baseboard rather than from cabled auxiliary power connectors, and it connects to the rest of the system over a PCIe 5.0 x16 interface. Its maximum power draw is up to 700W.

Built on a 4nm process, the NVIDIA H100 SXM5 80GB GPU is based on the GH100 graphics processor. It does not support DirectX 11 or DirectX 12, so it is not intended for gaming. In addition to the performance of its 528 Hopper-architecture Tensor Cores, it features 16896 shading units, 528 texture mapping units and 24 ROPs (Raster Operations Pipelines). This is the top-performing card for AI applications and accelerated computing on data center platforms. NVIDIA AI Enterprise software is a separate add-on, but it simplifies AI adoption by providing frameworks and tools for building accelerated AI workflows.
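The shader, Tensor Core and texture-unit counts are consistent with Hopper's streaming multiprocessor (SM) layout. The sketch below assumes 128 FP32 CUDA cores, 4 Tensor Cores and 4 texture units per SM, derives the SM count, and estimates a theoretical peak FP32 rate by counting a fused multiply-add (FMA) as two floating-point operations with every core running at the 1980MHz boost clock. This is a paper peak; sustained throughput depends on the workload and on power and thermal limits.

```python
# Derive SM count and theoretical peak FP32 throughput for the H100 SXM5.
SHADING_UNITS = 16896        # FP32 CUDA cores, from the spec above
TENSOR_CORES = 528
TEXTURE_UNITS = 528
BOOST_CLOCK_HZ = 1980e6

# Assumed Hopper SM layout: 128 FP32 cores, 4 Tensor Cores, 4 TMUs per SM.
FP32_CORES_PER_SM = 128
TENSOR_CORES_PER_SM = 4
TEXTURE_UNITS_PER_SM = 4
FLOPS_PER_FMA = 2            # one fused multiply-add = multiply + add

sm_count = SHADING_UNITS // FP32_CORES_PER_SM

# The other per-SM resources should scale with the same SM count.
consistent = (
    sm_count * TENSOR_CORES_PER_SM == TENSOR_CORES
    and sm_count * TEXTURE_UNITS_PER_SM == TEXTURE_UNITS
)

# Paper peak: every FP32 core completes one FMA per cycle at boost clock.
peak_fp32_tflops = SHADING_UNITS * FLOPS_PER_FMA * BOOST_CLOCK_HZ / 1e12

print(f"SMs: {sm_count}, layout consistent: {consistent}")
print(f"Theoretical peak FP32: {peak_fp32_tflops:.1f} TFLOPS")
```

With these assumptions the counts resolve to 132 SMs and a paper FP32 peak of roughly 66.9 TFLOPS; the 989 teraFLOPS quoted for the H100 is a Tensor Core figure, not a CUDA-core FP32 rate.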